Key Takeaways from Yann LeCun's Vision of AI's Future

Introduction

This blog post is based on a video in which AI pioneer Yann LeCun shares his vision for the future of AI. I’ve distilled the key takeaways from his talk into a simple, easy-to-follow format, and I hope it helps you gain a deeper understanding of where the field is heading.

You can also watch the original video:

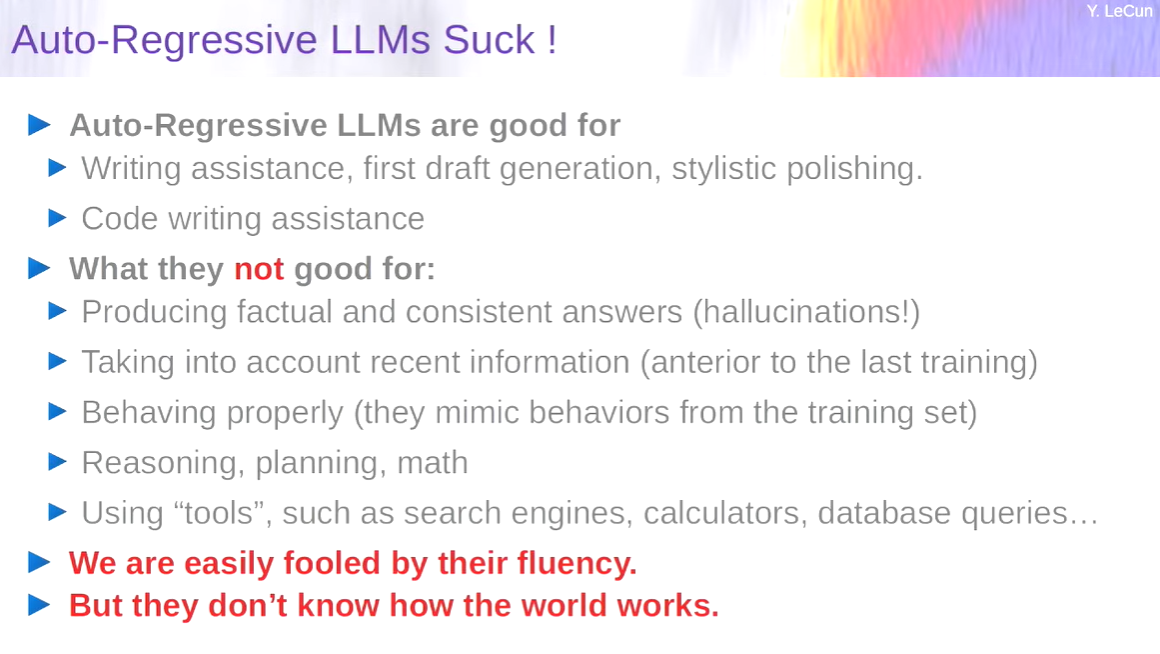

Understanding AI’s Core: Auto-Regressive Models

- What They Are: Models that predict what comes next based on what came before.

- Analogy: Like guessing the next word in a sentence you’re reading.

Auto-regressive models, the kind behind today’s large language models, generate text one token (roughly, a word or piece of a word) at a time, predicting each new token from everything produced so far. Think of it like your phone’s predictive text: it suggests the next word based on what you’ve already typed. While this works well for many tasks, it’s not foolproof. The model never plans its whole answer in advance, so the complexity of human language can trip it up, leading to errors or “hallucinations.”
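To see the auto-regressive loop in action, here is a minimal sketch using a word-level bigram model trained on a tiny made-up corpus. It is vastly simpler than a real language model, which uses a neural network conditioned on the whole context rather than just the previous word. The loop is the same, though: predict the next word, append it, and feed the result back in. The function names and corpus are illustrative, not from the talk.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> list[str]:
    """Greedy auto-regressive generation: each new word depends on the last one produced."""
    output = [start]
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation, so stop early
        next_word, _count = candidates.most_common(1)[0]
        output.append(next_word)  # the prediction becomes input for the next step
    return output


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(" ".join(generate(model, "the", max_words=4)))
```

Starting from “the”, it prints “the cat sat on the”. Every choice is locally the most likely next word, and nothing in the loop checks whether the sentence as a whole is heading anywhere sensible.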

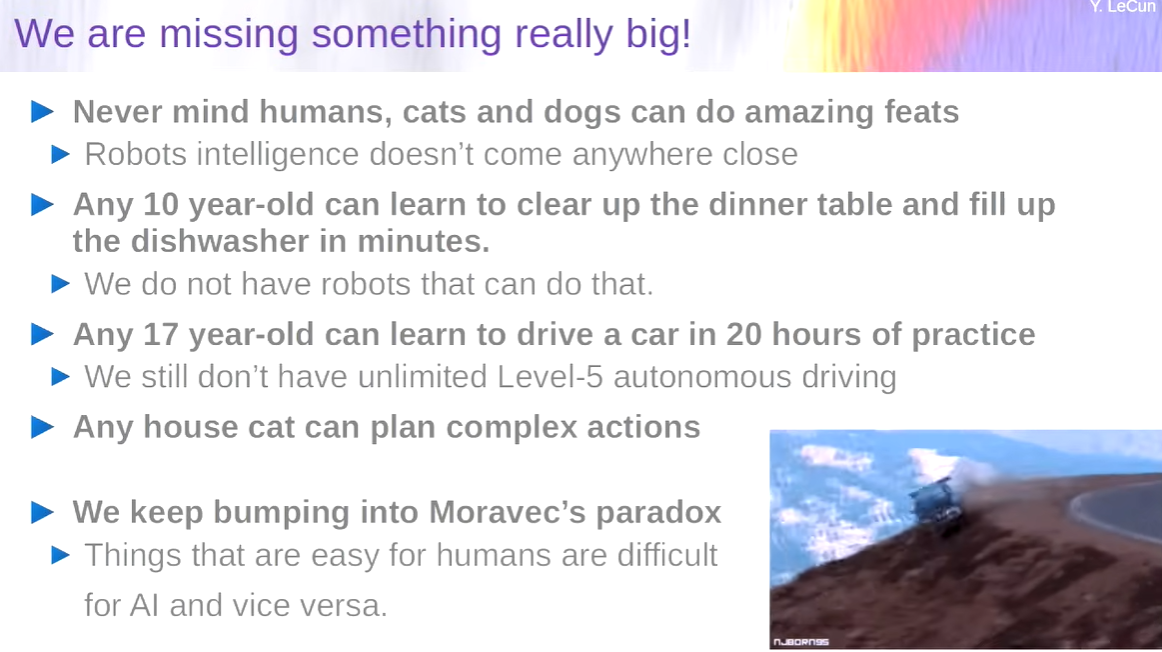

The ‘Common Sense’ Challenge in AI

- AI ‘Common Sense’: Not instinctual; it’s about processing and reacting to data.

- Human vs. AI Sense: AI lacks the wide-ranging, intuitive grasp of the world humans have.

- Specialization: AI shines in specific tasks but falters in generalized understanding.

Unlike humans, AI doesn’t come pre-equipped with ‘common sense.’ Its understanding is limited to the data it’s been fed. It’s like teaching someone to cook using only recipes—they can follow steps but can’t improvise a meal with random fridge leftovers. AI’s common sense is akin to this recipe-following ability.

AI Myths: General Intelligence vs. Specialization

- General Intelligence: The idea that AI can know and do everything well is a myth.

- Human Parallels: We’re all specialized; expertise in one area doesn’t equal universal knowledge.

- AGI’s Reality: True Artificial General Intelligence (AGI) remains a distant goal.

The notion of a machine with general intelligence, a jack-of-all-trades that can do everything well, is still in the realm of science fiction. As of now, AI excels in specific domains: it can beat grandmasters at chess but can’t necessarily navigate daily life the way humans do. It’s this distinction between narrow AI expertise and broader general intelligence that we must appreciate.

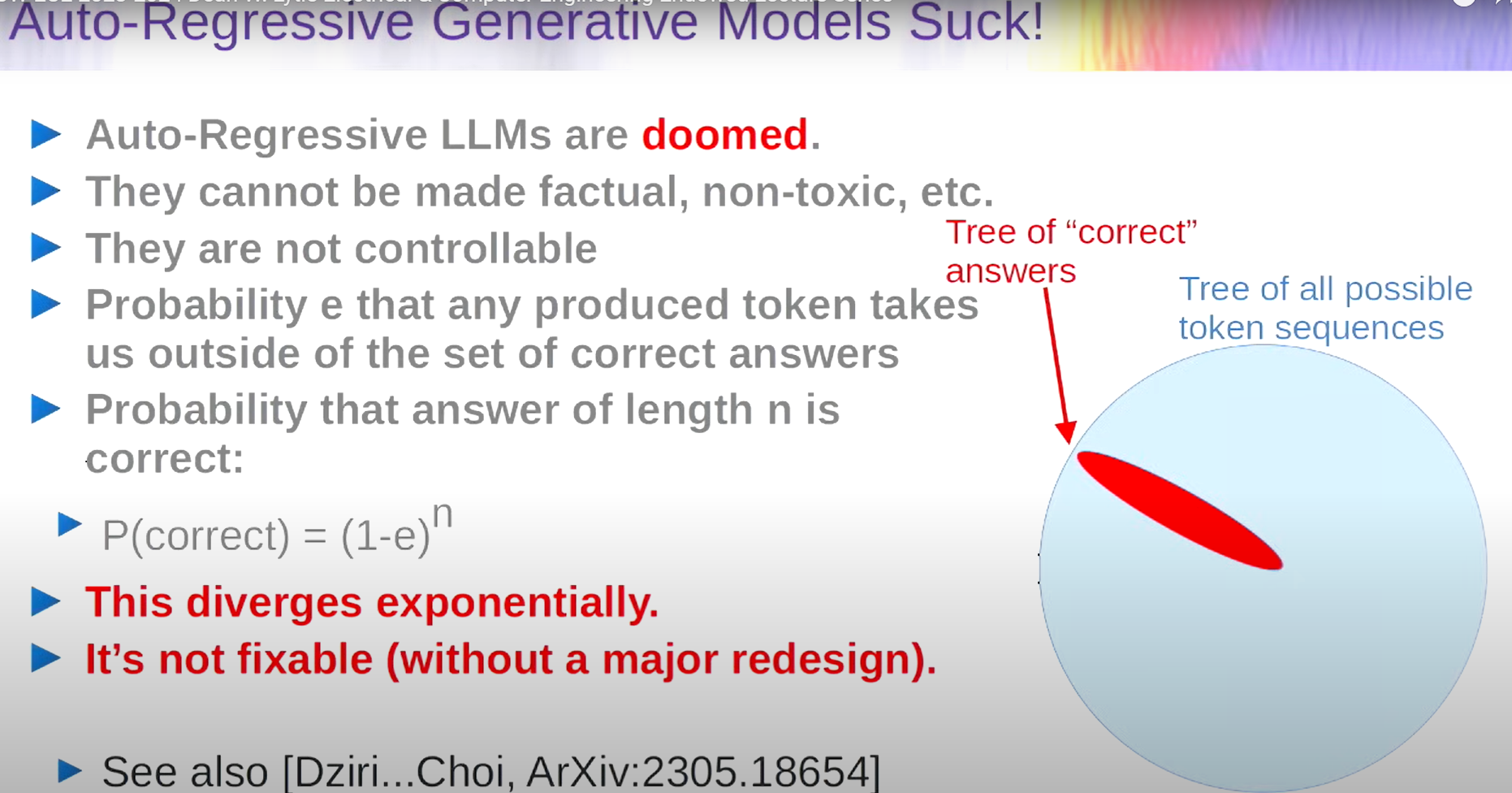

AI’s ‘Hallucinations’ and Decision-Making

- Hallucinations in AI: When AI makes up information not based in reality.

- Decision Making: Current AI lacks the ability to plan or reason like humans do.

- Understanding Errors: A small mistake can lead AI to a completely wrong conclusion.

When AI produces what we call a ‘hallucination,’ it’s essentially making a guess without enough correct information, much like being asked to finish a sentence in a language you barely know. Today’s AI generates its output one step at a time, without planning ahead. As a result, a single early mistake feeds into every step that follows and can snowball into a completely wrong answer.
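A back-of-the-envelope model LeCun has used in his talks makes the snowball effect concrete. Suppose each generated token independently has a probability e of taking the answer off track. Then the chance that an n-token answer stays entirely on track is (1 − e)^n, which shrinks exponentially with length. The independence assumption is a deliberate simplification. The sketch below only evaluates that formula, and the function name is ours.

```python
def prob_all_correct(error_rate: float, n_tokens: int) -> float:
    """Probability that none of n independently generated tokens is an error (simplified model)."""
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    return (1.0 - error_rate) ** n_tokens


# Same 1% per-token error rate, increasingly long answers.
for n in (10, 100, 1000):
    print(f"{n:>5} tokens: {prob_all_correct(0.01, n):.3f}")
```

With a 1% per-token error rate, a 10-token answer stays on track about 90% of the time and a 100-token answer only about 37% of the time. A 1000-token answer almost never does.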

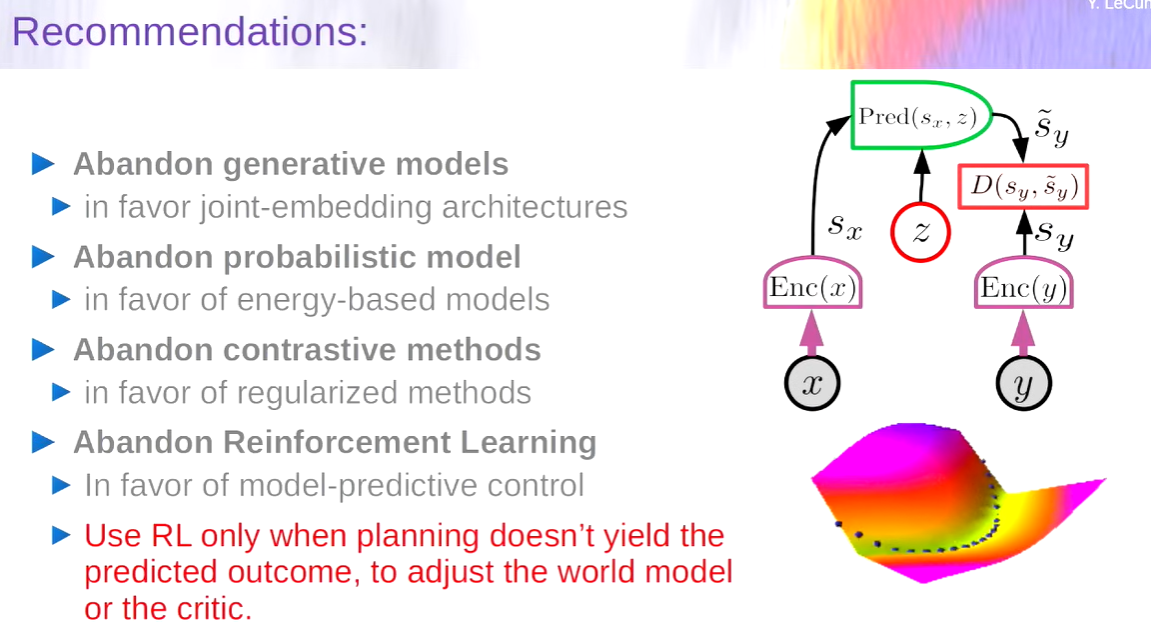

Rethinking AI Development

- New Architecture: Shift from generative to joint-embedding architectures for better results.

- Model-Predictive Control: A method allowing AI to plan and decide more effectively.
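To make the joint-embedding idea concrete, here is a deliberately tiny toy, not LeCun’s actual architecture. Each “observation” has one predictable value (a position that moves by 1 each step) plus eight values of random noise. A generative objective must predict the whole next observation, noise included. A joint-embedding objective instead compares a predicted representation with the actual representation of the next observation. The encoder and predictor here are hypothetical and hand-written. In a real joint-embedding architecture both are learned, and training must also keep the encoder from collapsing to a constant output that makes everything trivially “predictable.”

```python
import random


def make_frame(position: float, rng: random.Random) -> list[float]:
    """Toy observation: one predictable value (a position) plus unpredictable noise."""
    return [position] + [rng.gauss(0.0, 1.0) for _ in range(8)]


def encoder(frame: list[float]) -> list[float]:
    """Hypothetical hand-written encoder: keeps the predictable feature, drops the noise."""
    return [frame[0]]


def predictor(embedding: list[float], velocity: float = 1.0) -> list[float]:
    """Predicts the next *embedding* from the current one (constant-velocity assumption)."""
    return [embedding[0] + velocity]


def squared_error(a: list[float], b: list[float]) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b))


rng = random.Random(0)
x = make_frame(position=3.0, rng=rng)  # current observation
y = make_frame(position=4.0, rng=rng)  # next observation

# Generative-style objective: predict every value of y in input space.
# The best guess for pure noise is its mean (0.0), so this error stays large.
generative_guess = [x[0] + 1.0] + [0.0] * 8
print("input-space error:    ", squared_error(generative_guess, y))

# Joint-embedding objective: compare predicted and actual representations.
print("embedding-space error:", squared_error(predictor(encoder(x)), encoder(y)))
```

The input-space error stays large no matter how good the model is, because the noise cannot be predicted. The embedding-space error is zero because the encoder keeps only the part of the observation that is predictable.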

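Moving away from generative models, which try to predict every detail of their output, toward joint-embedding architectures, which make their predictions in an abstract representation space, could let AI concentrate on what is predictable and relevant instead of on unpredictable details. Combined with model-predictive control, where the system uses an internal model of the world to simulate the outcomes of candidate actions and chooses the sequence that best achieves its goal, this could be a game-changer for AI’s ability to plan ahead. It’s a bit like planning a road trip using a detailed map rather than just following the road signs.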
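Here is a minimal sketch of model-predictive control under heavy simplifying assumptions: a one-dimensional world whose dynamics the planner knows exactly (`world_model`), a hand-picked cost, and brute-force search over every short action sequence. All names and numbers are illustrative and not from the talk. Real systems would learn the world model and use far more efficient optimization than exhaustive search. The defining MPC pattern is the receding horizon: plan several steps ahead, execute only the first action, then replan from the new state.

```python
from itertools import product

ACTIONS = (-1.0, 0.0, 1.0)  # hypothetical discrete moves: left, stay, right


def world_model(state: float, action: float) -> float:
    """Toy internal model of the world: a point on a line that moves by `action`."""
    return state + action


def cost(state: float, action: float, goal: float) -> float:
    """How bad a step is: squared distance to the goal plus a small penalty for moving."""
    return (state - goal) ** 2 + 0.1 * action ** 2


def plan(state: float, goal: float, horizon: int = 3) -> tuple[float, ...]:
    """Imagine every action sequence with the world model and keep the cheapest one."""
    best_seq, best_cost = None, float("inf")
    for seq in product(ACTIONS, repeat=horizon):
        s, total = state, 0.0
        for a in seq:
            s = world_model(s, a)  # simulate, don't act
            total += cost(s, a, goal)
        if total < best_cost:
            best_seq, best_cost = seq, total
    return best_seq


def run_mpc(start: float, goal: float, steps: int = 8) -> list[float]:
    """Receding horizon: plan ahead, execute only the first action, then replan."""
    state, trajectory = start, [start]
    for _ in range(steps):
        first_action = plan(state, goal)[0]
        state = world_model(state, first_action)  # a real system would observe the environment here
        trajectory.append(state)
    return trajectory


print(run_mpc(start=0.0, goal=5.0))
```

Running it moves the state from 0 to 5 one step at a time and then holds it there. Replanning after every step is what lets MPC correct course when the real world does not behave exactly like its model.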

AI as a Universal Interface

- Future Role: AI could mediate our interaction with the digital world.

- Cultural Repository: AI has the potential to hold all human knowledge and culture.

- Open Source: Essential for ensuring diversity and widespread access.

In the future, AI might become the middleman between us and the digital world, holding the key to all human knowledge and culture. This necessitates an open-source approach to AI development to keep this knowledge free and accessible, much like the internet is today.

Implications for Industry and Policy

- Common Platforms: AI systems will need to be open and accessible.

- Shared Infrastructure: Like the internet, AI could be a shared digital space.

- Crowd-Sourced Training: Public contribution to AI learning for better customization.

The vision for AI is to become a shared digital foundation upon which diverse applications are built. This means the training and fine-tuning of AI would be a collective effort, harnessing the expertise and input of people worldwide to create a system that’s as diverse and versatile as the human population itself.

Countering AI Doomsday Predictions

- Speculations vs. Reality: Concerns about AI leading to human extinction are largely speculative.

- Safe AI Development: It’s possible and necessary, akin to building reliable airplanes.

- Time Investment: Developing robust AI systems will take time and care.

The fear that AI could lead to our downfall is more a work of fiction than a likely future. Just as air travel became safe through years of careful engineering, we can build safe AI systems the same way. It’s a process that will undoubtedly take time and patience, but it’s entirely within our reach.

Aligning AI with Human Values

- The Alignment Problem: Ensuring AI decisions align with human objectives and ethics.

- Benefit Amplification: AI has the potential to enhance human intelligence and capabilities.

- Societal Impact: Comparable to the printing press, AI could herald a new era of enlightenment.

The alignment problem is about making sure AI’s decisions match our values and ethics. Solving this is as much a technical challenge as a philosophical one. The potential benefits are enormous, with AI poised to enhance human intelligence and productivity, much like the printing press revolutionized the spread of knowledge.

Conclusion

And there we have it—a glimpse into the intricate world of AI through the lens of an expert, repackaged here with simplicity and clarity. Yann LeCun’s insights remind us that AI is not just a scientific endeavor but also a reflection of our human ambition to create and understand.

My perspective, shaped by these notes, leans towards cautious optimism. AI, with all its flaws and potential, is like a mirror to our own complexity. It’s a journey that we are all a part of, and together, we can steer it towards a future that amplifies the best of human intelligence and creativity.